The Business

Based in the Netherlands, Nebul is a leading European private and sovereign AI cloud provider. It delivers world-class AI supercomputing and GPU-accelerated business computing services that ensure EU data sovereignty, privacy, and compliance.

Unlike hyperscalers, which offer generic AI solutions, Nebul provides industry-specific AI models already fine-tuned on industry data. This makes it faster and easier for customers to build AI applications for healthcare, government, and other industries. Nebul can also tailor AI models to specific customer needs. The company is an NVIDIA Elite DGX & HGX Cloud Provider, Cloud Partner, and Solution Provider.

Complexity of a Full-Stack, End-to-End AI Solution

Despite their in-house expertise, Nebul struggled to deliver seamless resource allocation and multi-tenant provisioning for their full-stack AI cloud. Their platform integrated workload orchestration, NVIDIA operators, data fabric, and databases with AI software stacks and cloud infrastructure, all of which required extensive coordination and maintenance.

Their private AI Cloud uses Kubernetes for container orchestration and multiple generations of NVIDIA GPU, CPU, and DPU clusters. These clusters integrate Ethernet, InfiniBand, NVIDIA NVLink networks, and diverse storage types. For virtual machines, Nebul is transitioning from VMware to Mirantis OpenStack for Kubernetes, containerizing their infrastructure-as-a-service. Managing this complex stack required heavy operational effort.

A “Shared-Nothing” Approach to Data Privacy

To improve efficiency, Nebul considered Kubernetes multi-tenancy but found it insufficient for their privacy and isolation needs. Instead, they chose a “shared-nothing” model, deploying a dedicated cluster per customer. This guaranteed strong isolation but created Kubernetes sprawl: an ever-growing fleet of clusters that was costly and labor-intensive to operate.

Unifying Kubernetes Clusters to Streamline Management

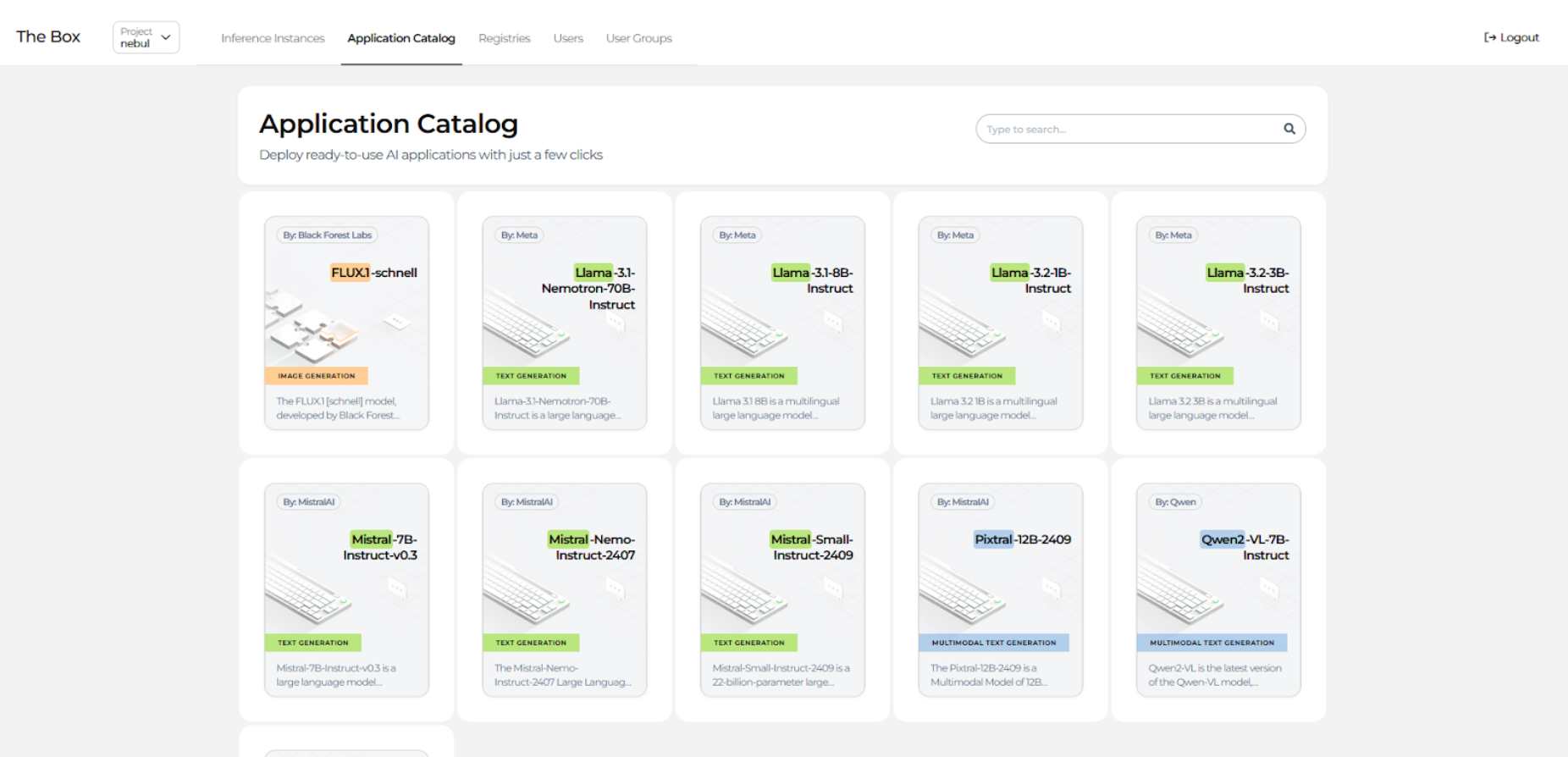

To address this, Nebul deployed Mirantis k0rdent AI, an enterprise-grade infrastructure solution for platform architects and MLOps engineers. It is built on the open-source k0rdent project and enables centralized management of AI infrastructure across public cloud, on-prem, hybrid, and edge environments.

Simplified Operations for AI Infrastructure

Mirantis k0rdent AI provides pre-validated templates for provisioning clusters and for integrating networking, storage, and security services from a broad ecosystem of cloud-native technologies. It also includes FinOps and observability tools for managing cost and performance while minimizing sprawl.
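To make the combination of a shared-nothing model and pre-validated templates more concrete, here is a minimal Python sketch. It assumes a simplified, hypothetical template format: `ClusterTemplate`, `ClusterSpec`, and `provision_per_tenant` are illustrative names only, not part of the k0rdent API, and the template name, version, GPU type, and tenant names are placeholders. The idea it shows is that a single validated template can stamp out one dedicated cluster definition per customer, so tenants stay isolated while every cluster remains consistent.

```python
from dataclasses import dataclass, field
from typing import Dict, List


# Hypothetical, simplified stand-in for a pre-validated cluster template.
# Field names are illustrative only; they are NOT the k0rdent schema.
@dataclass(frozen=True)
class ClusterTemplate:
    name: str
    kubernetes_version: str
    gpu_type: str
    services: tuple  # add-ons such as networking, storage, security


# Hypothetical per-tenant cluster definition rendered from a template.
@dataclass
class ClusterSpec:
    cluster_name: str
    tenant: str
    template: str
    kubernetes_version: str
    gpu_type: str
    gpu_nodes: int
    services: List[str] = field(default_factory=list)


def provision_per_tenant(template: ClusterTemplate,
                         tenants: Dict[str, int]) -> List[ClusterSpec]:
    """Render one dedicated ("shared-nothing") cluster spec per tenant.

    `tenants` maps a tenant name to the number of GPU nodes it needs.
    No cluster is shared between tenants, but all of them are derived
    from the same validated template. Tenants are processed in sorted
    order so the output is deterministic.
    """
    specs = []
    for tenant, gpu_nodes in sorted(tenants.items()):
        if gpu_nodes < 1:
            raise ValueError(f"tenant {tenant!r} needs at least one GPU node")
        specs.append(ClusterSpec(
            cluster_name=f"{tenant}-{template.name}",
            tenant=tenant,
            template=template.name,
            kubernetes_version=template.kubernetes_version,
            gpu_type=template.gpu_type,
            gpu_nodes=gpu_nodes,
            services=list(template.services),
        ))
    return specs


if __name__ == "__main__":
    # Placeholder values for illustration only.
    tmpl = ClusterTemplate("gpu-inference-v1", "1.31", "example-gpu",
                           ("cni", "csi", "policy"))
    for spec in provision_per_tenant(tmpl, {"hospital-a": 4, "agency-b": 2}):
        print(spec.cluster_name, spec.gpu_nodes)
```

Run as a script, this prints `agency-b-gpu-inference-v1 2` followed by `hospital-a-gpu-inference-v1 4`. In a real platform, the rendered definitions would presumably be handed to the cluster-management layer rather than printed; the sketch only illustrates why templates keep a per-customer fleet manageable.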

“As demand for AI services grows, our challenge was transitioning our existing infrastructure,” said Arnold Juffer, CEO and founder at Nebul. “Using k0rdent enables us to effectively unify our diverse infrastructure across OpenStack, bare metal Kubernetes, while sunsetting the VMware technology stack and fully transforming to open source to streamline operations and accelerate our shift to Inference-as-a-Service for enterprise customers. Now, they can bring their trained AI model to their data and just run it, with assurance of privacy and sovereignty in accordance with regulations. It’s as simple as that.”

Scalable Growth

With Mirantis k0rdent AI, Nebul now has the operational efficiency and scalability needed to expand to additional data centers across Europe—helping more organizations embrace AI while complying with regional privacy, security, and sovereignty laws.

