In the context of evolving cloud infrastructures, OpenStack stands out as a major open-source platform for managing and orchestrating virtualized environments. To ensure the efficient and reliable distribution of network traffic between different resources, the implementation of a load balancing service is essential.

Octavia, a native OpenStack component, offers a scalable and dynamic load balancing solution capable of managing fleets of virtual instances called “amphorae” to ensure high application availability.

This report presents the integration of the Octavia service into an OpenStack deployment, detailing the configuration steps, the creation of necessary networks and instances, as well as the mechanisms guaranteeing the proper functioning of the load balancer. The objective is to demonstrate how Octavia contributes to improving the reliability and performance of services hosted on OpenStack through automated and adaptable load balancing.

Chapter 1: Load Balancing and the Cloud

1.1 Definition of Load Balancing

Load balancing is the process of distributing workloads and network traffic across multiple servers. This process maximizes resource utilization, reduces response times, and avoids overloading a single server.

A load balancer acts as an intermediary between client devices and backend servers, ensuring that requests are directed to the most appropriate server. Traffic is distributed based on different criteria:

Round Robin: Requests are sent sequentially to each server in turn.

Least Connections: Requests are directed to the server with the fewest active connections.

IP Hash: Requests are routed based on the client’s IP address to ensure session persistence.
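The three criteria above can be sketched in a few lines of Python. This is only an illustration of the selection logic, not how any particular load balancer implements it; the `servers` list and the function names are hypothetical.

```python
import hashlib
from itertools import cycle

# Hypothetical backend addresses, used only for illustration.
servers = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]

# Round Robin: hand out servers sequentially, wrapping around at the end.
_rotation = cycle(servers)

def round_robin():
    return next(_rotation)

# Least Connections: pick the server with the fewest active connections.
def least_connections(active):
    # `active` maps each server to its current number of open connections.
    return min(active, key=active.get)

# IP Hash: the same client IP always maps to the same server.
def ip_hash(client_ip):
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]
```

Note that with IP Hash the mapping changes if the server list changes, so persistence only holds while the set of backends is stable.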

A load balancer can be a hardware device, a software application, or a cloud service.

Hardware Load Balancers: Physical devices used in high-performance data centers.

Software Load Balancers: Solutions installed on servers or containers, offering greater flexibility.

Cloud Load Balancers: Solutions provided by cloud providers, offering scalability and ease of use.

Whatever its form, a load balancer performs three core functions:

Handling Client Requests: Receiving requests from client devices.

Traffic Distribution: Distributing requests using a selected algorithm.

Health Verification: Checking server health status before routing.
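These three functions can be combined in a small sketch: a request arrives, unhealthy servers are filtered out, and the request is assigned to one of the remaining servers. The health check here is a plain TCP connection attempt and the distribution is a simple modulo rotation; both are assumptions chosen for brevity, and the names `is_healthy` and `route` are hypothetical.

```python
import socket

def is_healthy(host, port, timeout=1.0):
    """TCP health check: healthy if a connection can be opened in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def route(request_id, backends, check=is_healthy):
    """Return the backend chosen for a request, skipping unhealthy ones."""
    # Health verification happens before any routing decision.
    healthy = [b for b in backends if check(*b)]
    if not healthy:
        raise RuntimeError("no healthy backend available")
    # Traffic distribution: rotate over the healthy backends only.
    return healthy[request_id % len(healthy)]
```

Passing the check as a parameter makes it easy to swap the TCP probe for an HTTP probe or a stub during testing.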

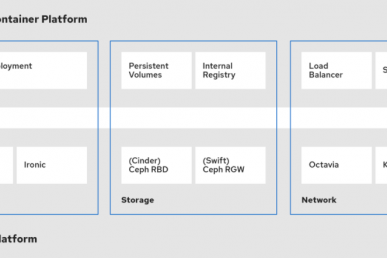

Octavia relies on several other OpenStack services:

Neutron: Ensures network connectivity between Amphorae and tenant networks.

Barbican: Manages TLS certificates.

Keystone: Handles authentication.

Glance: Stores the Amphora VM images.

Octavia itself is made up of the following components:

- API Controller: Receives and sanitizes API requests.

- Controller Worker: Executes necessary actions.

- Health Manager: Monitors Amphora health and handles failover.

- Housekeeping Manager: Cleans up database records and rotates certificates.

- Driver Agent: Receives statistics from drivers.
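To illustrate the kind of check the Health Manager performs, here is a simplified sketch of heartbeat-based failure detection. It is not Octavia's code: the `stale_amphorae` function, the amphora IDs, and the 60-second timeout are assumptions made for the example.

```python
import time

HEARTBEAT_TIMEOUT = 60.0  # seconds; illustrative value, not read from any config

def stale_amphorae(last_seen, now=None, timeout=HEARTBEAT_TIMEOUT):
    """Return IDs of amphorae whose last heartbeat is older than `timeout`.

    `last_seen` maps an amphora ID to the timestamp of its latest heartbeat.
    """
    now = time.time() if now is None else now
    return sorted(amp for amp, ts in last_seen.items() if now - ts > timeout)
```

In a real deployment, each ID returned by such a check would be a candidate for failover, meaning the amphora is replaced by a fresh instance.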

2.4 Functional Architecture

Load Balancer: Occupies a Neutron network port and has a VIP (Virtual IP).

Listener: Defines the port/protocol the LB listens on (e.g., HTTP Port 80).

Pool: A list of members serving content.

Health Monitor: Checks member status and redirects traffic away from unresponsive servers.
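The relationship between these four objects can be modeled with a few dataclasses. This is a conceptual sketch only: the class and field names loosely mirror the terms above, and the addresses and timing values are made up for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Member:
    address: str
    port: int
    online: bool = True   # would be updated by the health monitor

@dataclass
class HealthMonitor:
    type: str = "HTTP"
    delay: int = 5        # seconds between checks (illustrative)
    max_retries: int = 3  # failed checks before a member is marked down

@dataclass
class Pool:
    members: List[Member] = field(default_factory=list)
    health_monitor: Optional[HealthMonitor] = None

    def available(self):
        """Members that may receive traffic."""
        return [m for m in self.members if m.online]

@dataclass
class Listener:
    protocol: str
    port: int
    default_pool: Pool

@dataclass
class LoadBalancer:
    vip_address: str
    listeners: List[Listener] = field(default_factory=list)

# One load balancer, one HTTP listener on port 80, one pool with two members.
pool = Pool(
    members=[Member("192.168.1.10", 80), Member("192.168.1.11", 80, online=False)],
    health_monitor=HealthMonitor(),
)
lb = LoadBalancer("203.0.113.10", [Listener("HTTP", 80, pool)])
```

Here only the first member is returned by `pool.available()`, which mirrors how traffic is steered away from a member that fails its health checks.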

Port 9443 (Amphora): Used for control/management via HTTPS between the Octavia Controller and the Amphora.

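LB Management Network: Connects Octavia services to Amphorae. It carries the control traffic on port 9443 and the heartbeat messages that Amphorae send to the Health Manager.

VIP Network: Entry point for user traffic, hosting the Virtual IP. In a High Availability (Master/Backup) topology, the VRRP traffic between the two Amphorae is also exchanged on this network.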

Tenant Network: Connects the final backend instances (VMs).

Chapter 3: Integration of Octavia Service in an OpenStack Deployment

We integrated the Octavia module into an All-in-One OpenStack deployment using Kolla Ansible. We chose this mode initially to better understand how the Octavia service works.

It is important to note that there are several methods to integrate Octavia into an OpenStack environment, depending on the level of complexity, the target environment (development, testing, or production), and the type of network being used.

For our deployment, we opted for the method provided by Kolla Ansible for development or testing environments, which enables a simplified configuration of Octavia networking when using the Neutron ML2 driver with Open vSwitch. This method automatically creates a tenant network and configures the Octavia control services to access it.

When this method is enabled, Kolla Ansible automatically creates a VXLAN network that is used as the load balancer management network (lb_mgmt_network). This network allows Octavia control services to communicate with amphora instances (load balancer VMs).

To use this method and properly integrate the Octavia service into our deployment, we simply need to modify the globals.yml file.

Below is our globals.yml file.
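As a rough guide to what such a file contains, the sketch below renders the two Octavia-related settings that the Kolla Ansible documentation describes for this development and testing method. The values are an assumption based on that documentation, not a copy of our file, and the helper `to_globals_yaml` is hypothetical.

```python
# Settings assumed from the Kolla Ansible Octavia guide,
# not copied from the deployment described in this article.
octavia_settings = {
    "enable_octavia": "yes",
    "octavia_network_type": "tenant",
}

def to_globals_yaml(settings):
    """Render flat string settings as globals.yml lines."""
    return "\n".join(f'{key}: "{value}"' for key, value in settings.items())

print(to_globals_yaml(octavia_settings))
```

A real globals.yml also contains the general deployment settings (base distribution, network interfaces, VIP address, and so on), which are omitted here.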

To verify that the Octavia service was functioning correctly, we created an external network, an internal network, a router, and of course an instance that would later serve as a backend instance for a pool.

In order to ensure that the Octavia amphora could access this instance, we had to attach the Octavia management network (lb_mgmt_network) to our router.

After this configuration, all that remains is to create the load balancer. Once created, our LB reported a healthy state.
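A load balancer is not usable immediately after creation: Octavia reports a provisioning status that moves from PENDING_CREATE to ACTIVE, or to ERROR if something fails. The sketch below shows one way to wait for that transition. The `get_status` callable is hypothetical; in practice it could wrap a call such as `openstack loadbalancer show <name> -f value -c provisioning_status`.

```python
import time

def wait_until_active(get_status, attempts=30, delay=5.0, sleep=time.sleep):
    """Poll `get_status()` until it returns ACTIVE.

    Returns True once ACTIVE is seen, False if all attempts are used up,
    and raises RuntimeError if the status becomes ERROR.
    """
    for _ in range(attempts):
        status = get_status()
        if status == "ACTIVE":
            return True
        if status == "ERROR":
            raise RuntimeError("load balancer entered ERROR state")
        sleep(delay)
    return False
```

The attempt count and delay are arbitrary defaults; amphora boot time depends on the environment, so they may need adjusting.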

Conclusion

To conclude this first part, we have successfully integrated the Octavia load balancing service into our OpenStack All-in-One deployment using Kolla Ansible. We established the theoretical foundations of load balancing and detailed Octavia’s architecture, in particular the role of Amphorae and the network topologies required for its operation. The practical phase allowed us to configure the necessary management network (lb_mgmt_network) and verify that the load balancer was correctly provisioned and in an Active state. This confirms that the infrastructure is ready to handle traffic.
